Proxmox VE is an excellent system for managing virtualised services and configuring hardware for high availability, but when my Proxmox host became responsible for actually important tasks I lost the ability to tinker destructively with it for fun and education. because of this I wanted to set up a new cluster as a lab environment, and this time I decided to use ESXi and vCenter/vSphere.

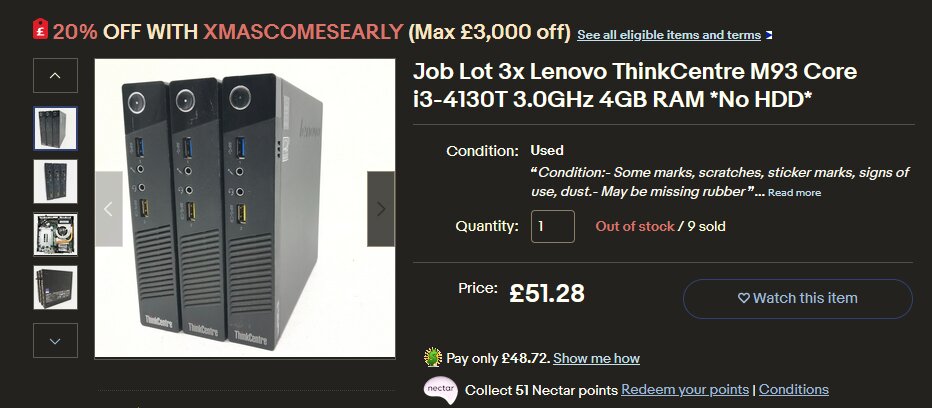

I found an eBay listing for three Lenovo ThinkCentre M93 PCs. these would become the three hosts in the cluster. with the festive discount and free shipping, these were impressively cheap while still being just about capable of doing what I wanted them to do.

to run vCenter, at least one node will need a minimum of 8GB of memory. bargainhardware.co.uk sell compatible SODIMMs very cheaply, so I upgraded all three nodes to 8GB for £28, bringing the hardware total to around £75. for a few extra quid each node could be given 12 or 16GB in the future if desired.

here they are with the RAM fitted, and some 80GB Intel S3500 SSDs I had lying around. these are old and small, but reliable and sufficient for these purposes.

the excellent cheapness here can't be argued with, but there are some obvious limitations when it comes to adding more storage and peripherals. it might be possible to add a second NIC to these machines by adapting the Mini PCIe slot, but otherwise the only option is USB. I'm aware that using USB for things like VM storage is bad for both speed and reliability, but it is something I might experiment with here for the sake of education. the i3 can likely be upgraded to a 4c/8t Xeon (E3-1270 v3) for an additional £15 per node. these have a significantly higher thermal design power than the officially supported chips, though, so it would be interesting to test this.

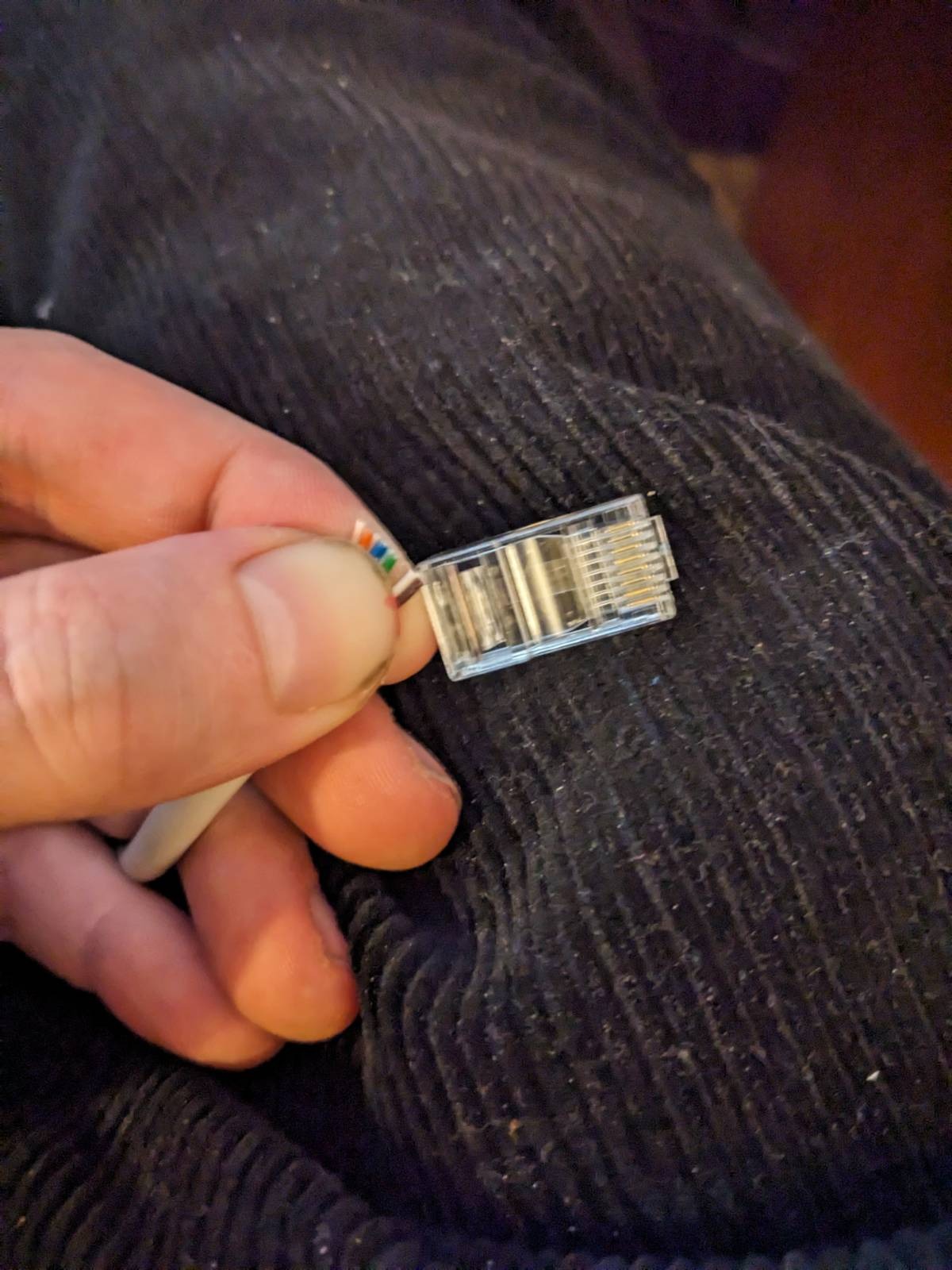

an ESXi 8 .iso can be downloaded from the VMware website after some faffing with accounts and licences, and it installs without issue from a flash drive. after setting each node to get its time via NTP, turning on the Intel virtualisation features (VT-x) in the BIOS, and giving each node a static IP address and a sensible hostname, it was time to install vCenter server.

vCenter server is available from the VMware website too, but installing it on this hardware involved a bit of trickery. it comes as a ~10GB .iso, which can be mounted on a workstation and installed onto an ESXi node over the network using the bundled installer for Windows or Linux. the problem is that, by default, vCenter requires a minimum of 14GB of RAM for its appliance VM. it's possible to change this number to something lower, but this involves extracting the contents of the .iso and modifying them.
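the per-node ESXi settings (hostname, NTP and a static address) can also be applied from the ESXi shell with `esxcli`. this is a sketch rather than what I actually ran: the `esxi0N` hostnames, the `lab.local` domain, the `192.168.1.x` addresses and gateway, and the `pool.ntp.org` server are all placeholders, and the `esxcli system ntp` namespace assumes ESXi 7.0 U1 or later. setting `DRY_RUN=1` prints the commands instead of running them.

```bash
# Sketch: apply basic per-node settings on an ESXi host with esxcli.
# All names and addresses are hypothetical placeholders for a small lab.
# Set DRY_RUN=1 to print each command instead of executing it.

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"   # dry run: show the command on one line
    else
        "$@"                 # real run: execute it
    fi
}

configure_node() {
    local n="$1"                      # node number: 1, 2 or 3
    local host="esxi0${n}"            # hypothetical naming scheme
    local ip="192.168.1.$((10 + n))"  # hypothetical lab subnet

    run esxcli system hostname set --host="$host" --domain=lab.local
    # NTP configuration through esxcli needs ESXi 7.0 U1 or later.
    run esxcli system ntp set --server=pool.ntp.org --enabled=true
    # Changing vmk0 drops any SSH session using the old address, so this
    # part is better run from the local console.
    run esxcli network ip interface ipv4 set --interface-name=vmk0 \
        --type=static --ipv4="$ip" --netmask=255.255.255.0
    run esxcli network ip route ipv4 add --gateway=192.168.1.1 --network=default
}
```

with SSH or the ESXi Shell enabled, the file can be sourced on a host and `DRY_RUN=1 configure_node 1` used to check the four commands before running `configure_node 1` for real.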

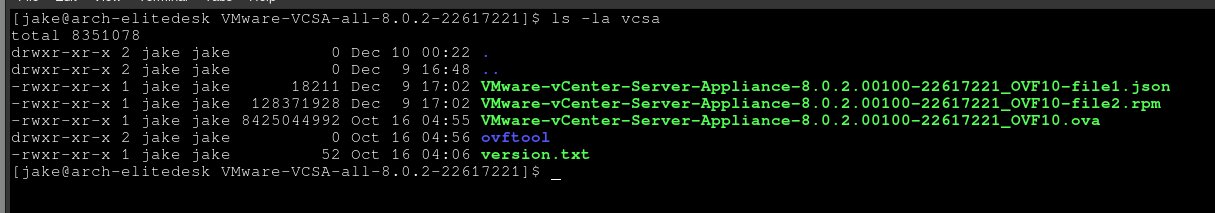

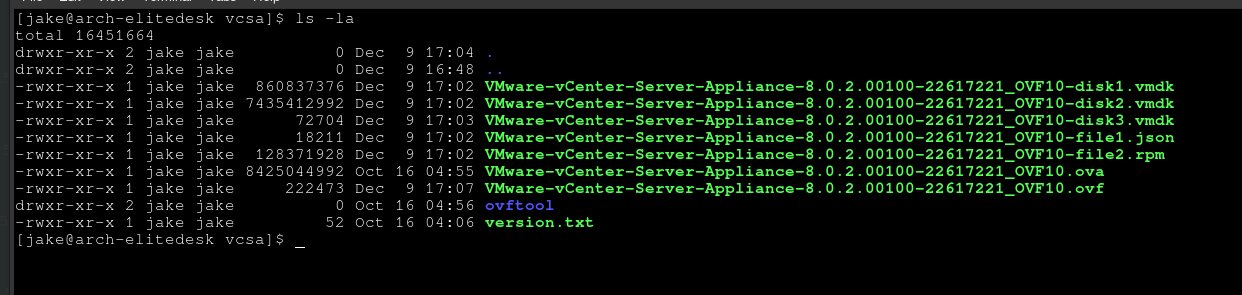

after unpacking the vCenter .iso to a directory, I could use the bundled ovftool program to create an .ovf config file and .vmdk disk images from the .ova found in the vcsa directory at the root of the .iso. the ovftool binaries for Windows and Linux can be found in vcsa/ovftool.

the following command creates an .ovf and the .vmdk files from the .ova. note: if using the bundled ovftool binary, you will need to give the full path to it rather than just its name.

[/bin/bash]$ ovftool VMware-vCenter-Server-Appliance-(version_number).ova VMware-vCenter-Server-Appliance-(version_number).ovf
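to avoid typing out the full path to the bundled binary, the small sketch below looks for `ovftool` on the `PATH` first and otherwise searches under `vcsa/ovftool` in the unpacked .iso. `find_ovftool` and `ova_to_ovf` are made-up helper names, and I'm assuming the Linux binary is a file literally named `ovftool` somewhere below that directory, so check your own copy.

```bash
# Sketch: locate ovftool and convert the appliance .ova to .ovf + .vmdk.
# find_ovftool and ova_to_ovf are hypothetical helper names.

# Print the path of an ovftool binary: one on PATH wins, otherwise search
# the unpacked ISO (assumed layout: <iso_root>/vcsa/ovftool/...).
find_ovftool() {
    local iso_root="$1" bin
    if bin=$(command -v ovftool); then
        printf '%s\n' "$bin"
        return 0
    fi
    bin=$(find "$iso_root/vcsa/ovftool" -type f -name ovftool 2>/dev/null | head -n 1)
    if [ -z "$bin" ]; then
        echo "ovftool not found under $iso_root/vcsa/ovftool" >&2
        return 1
    fi
    printf '%s\n' "$bin"
}

# Run ovftool on an .ova, writing an .ovf with the same base name next to
# it (ovftool writes the .vmdk disks and .mf manifest alongside).
ova_to_ovf() {
    local ova="$1" tool="$2"
    "$tool" "$ova" "${ova%.ova}.ovf"
}
```

for example, `ova_to_ovf "$PWD/vcsa/your-appliance.ova" "$(find_ovftool "$PWD")"` run from the unpacked .iso's root does the same job as the command above.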

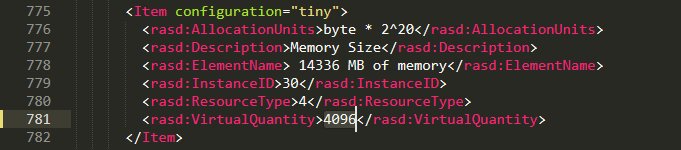

this creates the .ovf file and some .vmdk images in the vcsa directory (or wherever the command was run from), along with a VMware-vCenter-Server-Appliance-(version_number).mf manifest file. the manifest should be deleted: it holds checksums of the original files, so it would conflict with our modifications. in VMware-vCenter-Server-Appliance-(version_number).ovf I modified this value at line 781, changing the RAM requirement of the VM from 14336MB to 4096MB. don't do this in production.
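the hand edit above can also be scripted. this is a sketch under some assumptions: that the 14336MB figure appears in the .ovf as `<rasd:VirtualQuantity>14336</rasd:VirtualQuantity>` (worth confirming with `grep -n 14336` first, since I only noted the line number), that GNU `sed` is available for the in-place edit, and that `shrink_vcsa_ram` is just a made-up name. it keeps a backup of the original .ovf and only deletes the manifest once the replacement has actually happened.

```bash
# Sketch: lower the memory value in the vCenter appliance .ovf and remove
# the now-stale manifest. Assumes GNU sed and that the value is stored as
#   <rasd:VirtualQuantity>14336</rasd:VirtualQuantity>
# shrink_vcsa_ram is a hypothetical helper name.
shrink_vcsa_ram() {
    local ovf="$1"
    local old_mb="${2:-14336}"   # value to replace, in MB
    local new_mb="${3:-4096}"    # new value, in MB
    local mf="${ovf%.ovf}.mf"    # manifest written next to the .ovf by ovftool
    local old_tag="<rasd:VirtualQuantity>${old_mb}</rasd:VirtualQuantity>"
    local new_tag="<rasd:VirtualQuantity>${new_mb}</rasd:VirtualQuantity>"

    if [ ! -f "$ovf" ]; then
        echo "no such file: $ovf" >&2
        return 1
    fi

    # Refuse to touch anything if the expected value is not present.
    if ! grep -q -- "$old_tag" "$ovf"; then
        echo "$old_tag not found in $ovf; edit it by hand" >&2
        return 1
    fi

    cp -- "$ovf" "$ovf.orig"                    # keep an undo copy
    sed -i "s|${old_tag}|${new_tag}|g" "$ovf"   # GNU sed in-place edit

    # The manifest's checksums no longer match the edited .ovf, so remove it.
    rm -f -- "$mf"
}
```

called with just the .ovf path it applies the same 14336MB to 4096MB change made here; two extra arguments select different old and new values.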

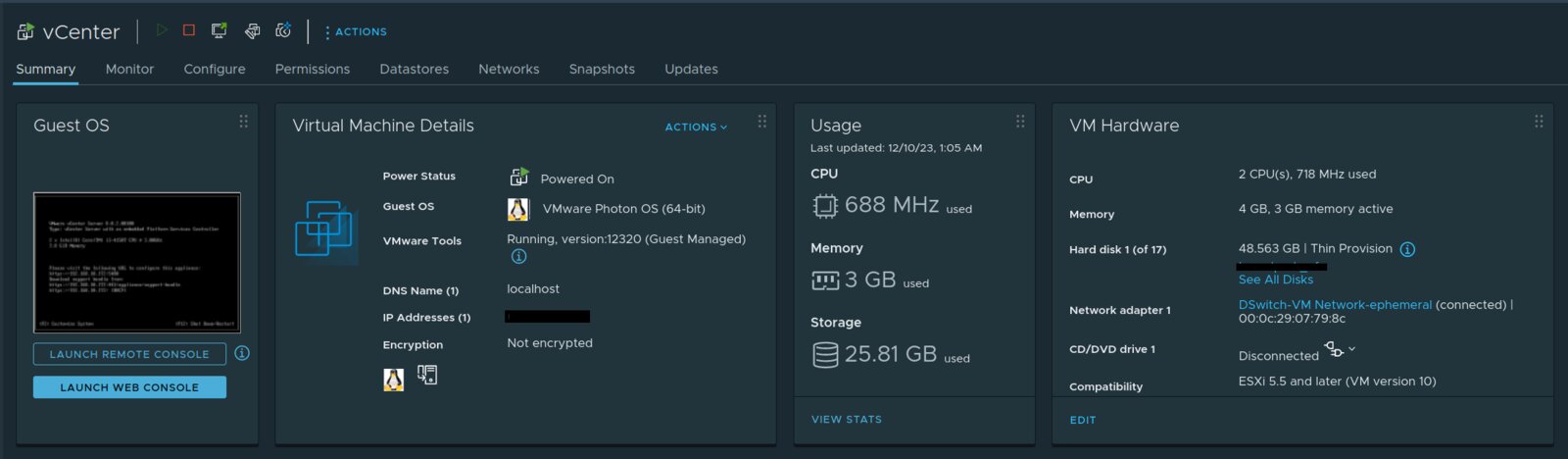

this modified .ovf file can then be used to deploy the vCenter appliance, along with its .vmdk disk images, to the first node using VMware Workstation Pro. I didn't keep a record of this process, but it all happens through the VMware Workstation GUI and is quite intuitive. it may also be possible to repackage the .iso with the modified RAM value and deploy it using the bundled installer, but I decided against trying.

after deploying, vCenter features including HA cluster management appear to work okay with the 4096MB cap, but it would definitely be preferable to give it more RAM in future. I'm not exactly sure what would go wrong first when vCenter starts running out of memory but it presumably wouldn't be great.

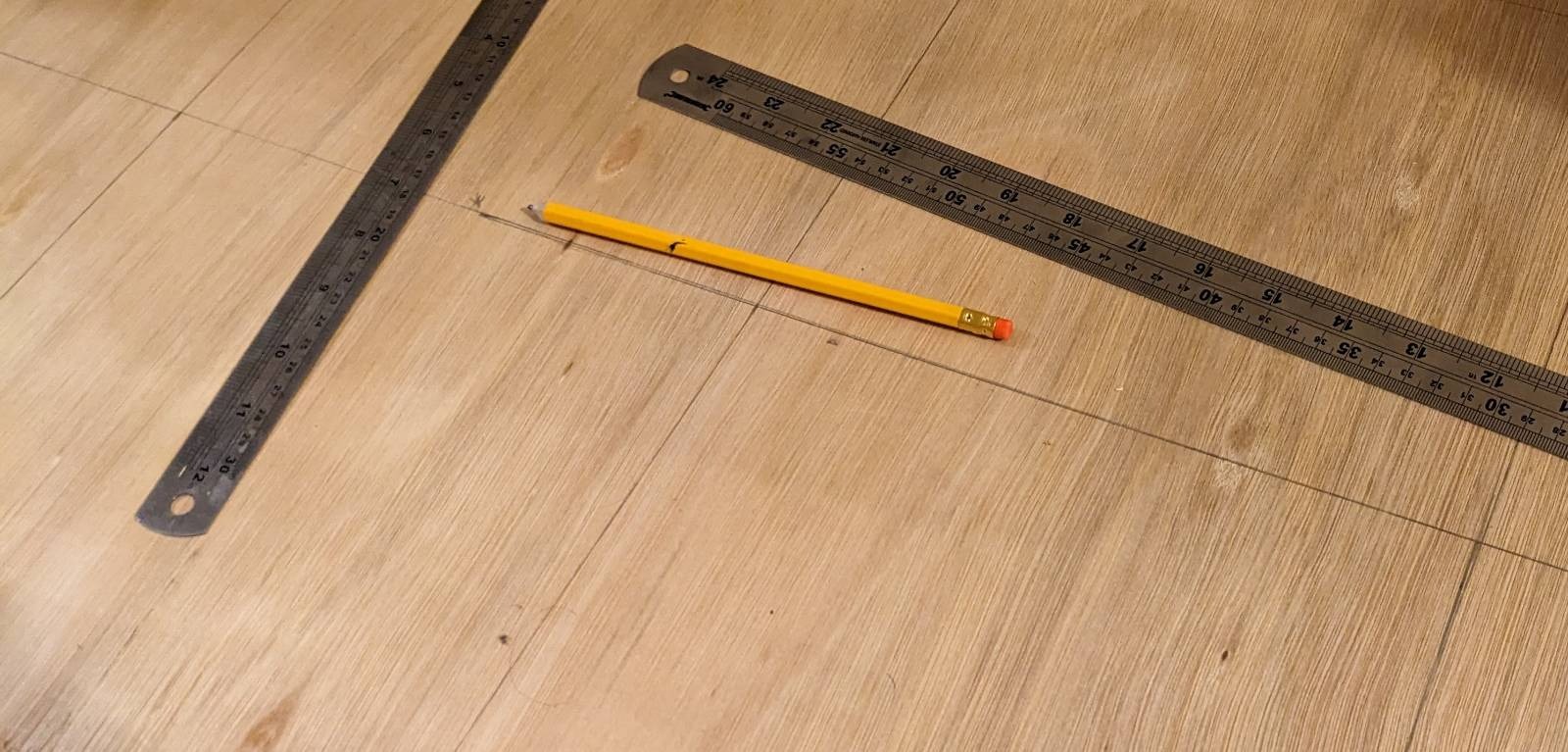

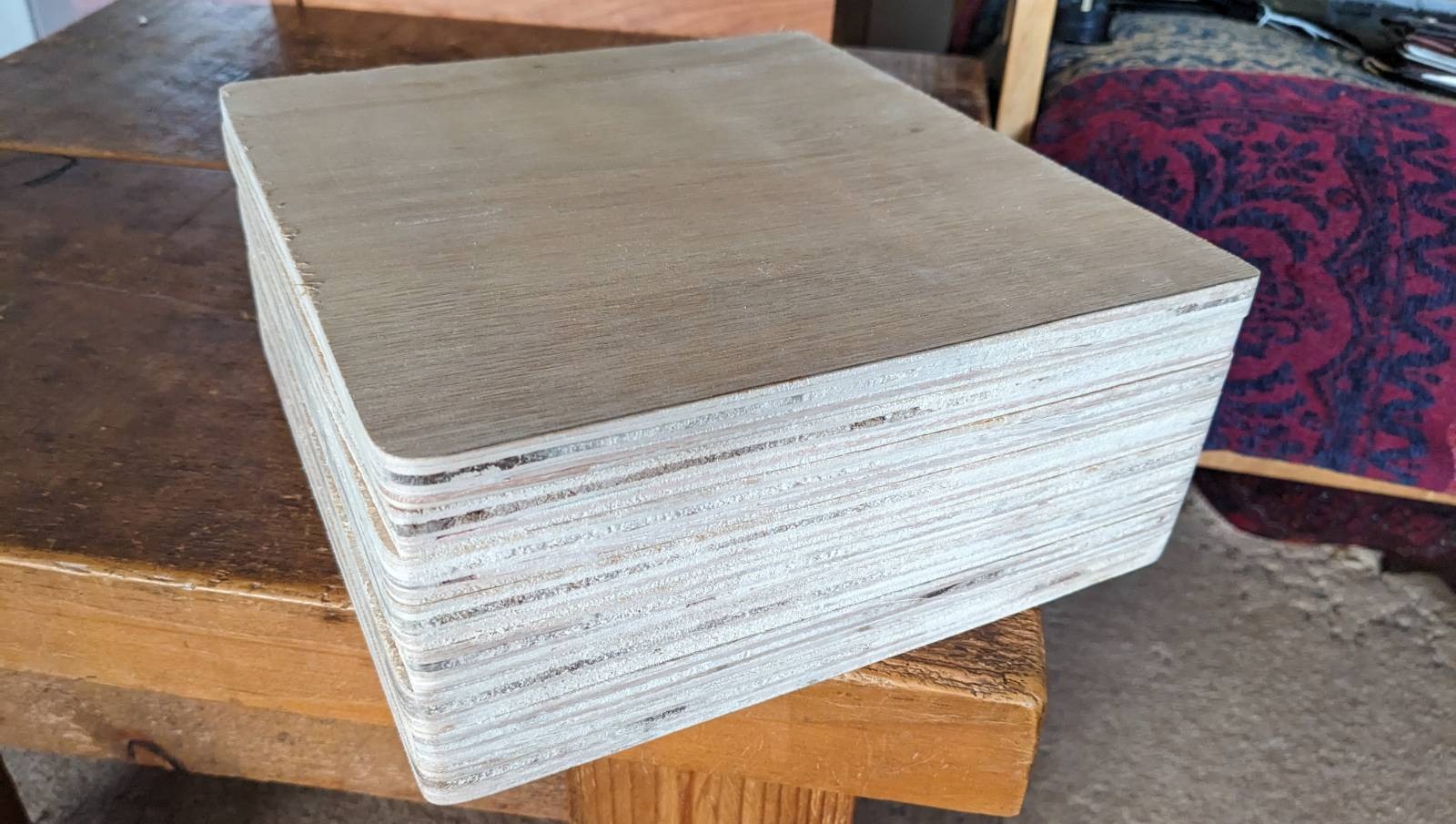

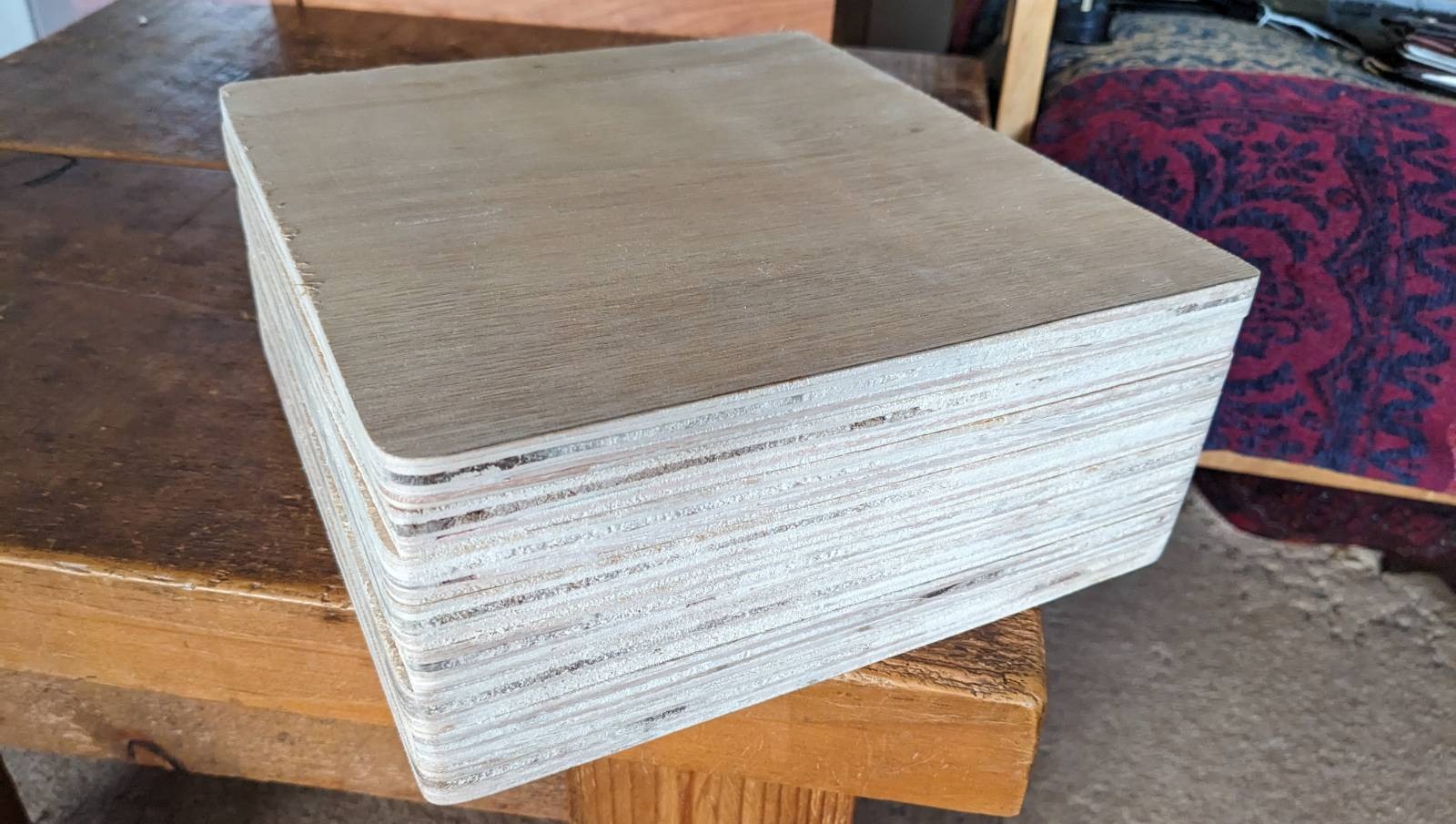

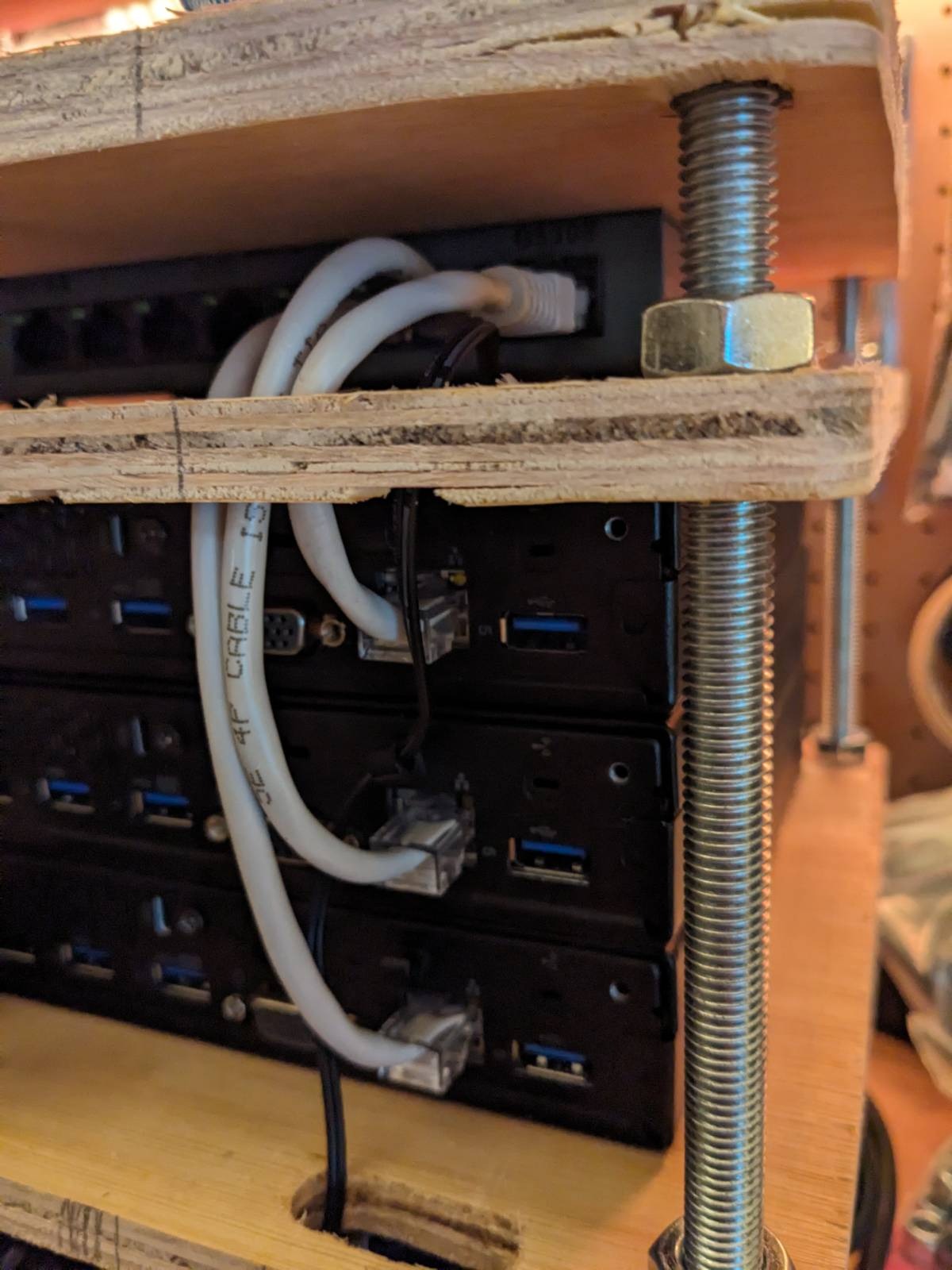

to keep the nodes, power supplies, switch and some other bits together I made a small rack-tower from 12mm ply and M10 studding. its footprint is 230mm x 250mm, and more shelves can be fitted if I add hardware later. I was able to get 8 shelves from the offcut I bought, so there are a couple of spares. this adds another £17 to the total.

holes in the corners of the shelves let them hang on the studding, supported by nuts. the components are held in place by compression from the shelves above and below. this makes removing them more difficult than it needs to be, but it keeps the whole thing more compact.

now that the cluster has been up for a little while I can report that it does indeed get a little ugly when the vCenter server runs out of memory; after a reboot it thrashes and swaps for ages - during which time the web console is not accessible - until it eventually settles down and gets back on track. other than that it seems surprisingly okay with 10GB less memory than technically required. again, this RAM allocation hack is terrible and you shouldn't do it, but for experimentation purposes it has been pretty good.

as long as I stick to small Linux VMs and avoid rebooting the vCenter VM, everything chugs along fairly smoothly, and it will be a good place for experiments. adding shelves for extra mini PC nodes would be trivial, so rather than spending money on memory and storage for these three I will probably look for bundle deals on 11th or 12th gen machines when the time comes. I can recommend a project like this to anyone interested in learning the ropes of hypervisors and high availability: Proxmox and ESXi are both quite accessible given how powerful they can be.

an update: as of 02/2024, VMware's licensing arrangements, and the free availability of vSphere, have got a lot worse. this seems like a prompt for me and others like me to spend more time with Proxmox, XCP-ng and other KVM- or Xen-based solutions instead. I will experiment with XCP-ng on this cluster soon.