aarch64 is good and x86 is rubbish, but one thing I have been unable to do on aarch64 so far is run a proper hypervisor. sometimes containers just don't cut it. all publicly available ARM cloud instances disable nested virtualisation extensions (although the Ampere chips they run on could probably support it if they wanted), and the more grunty ARM hardware is still prohibitively expensive. with the release of the Pi 5, I decided to buy one and see how many of the Proxmox/KVM home comforts can be made to work. I am happy to report that after some faffing, I have Proxmox running on the 8GB Raspberry Pi 5, with almost all of its usual features working as you'd expect.

there is no large, obvious reason why this shouldn't just work. the underlying components of Proxmox (Qemu, KVM, LXC, ZFS) are all ubiquitous, well supported, and to at least some degree tested and working on modern ARM ISAs. but there are some issues.

firstly, Proxmox does not support aarch64 or provide packages for it. the Pimox project provides a neat all-in-one installer and a repository of deb packages for the Pi 4, but something about the installer (as of 2025-01-11) does not support the Pi 5. this might be a simple version check or something more significant, but while looking around for alternative precompiled sources I found this Chinese project (Jiangcuo) and its accompanying repo. they report having "a large number of Kunpeng and Loongson users" running their packages in production, which suggests to me that they have put some work into making the whole PVE ecosystem work properly on aarch64. they are even working on RISC-V! Reddit user u/[deleted] helpfully states that he wouldn't touch this repo with a 30.48m pole, but doesn't provide any reasoning beyond nebulous implied xenophobia, so onward we go.

I found that reading and manually following the steps from Pimox's install script (but using the Jiangcuo repo instead) allowed Proxmox to install painlessly, and after a reboot I was greeted with the familiar web console at port 8006. I have been this far with Rockchip hardware before though, and ran into showstopping problems with ZFS versions, KVM kernel module compatibility, and UI crashes when these components did not line up with what Proxmox was expecting to find on the system.

on the Pi, there are no such problems. one quirk is that the q35 machine type (x86 only) is still available as an option in the VM creation dialogue, and indeed it is selected as the default in some situations. this leads PVE to generate VMID.conf files, and thus kvm commands, containing x86-specific flags which cause errors even after the machine type is set to something compatible. more on that later.

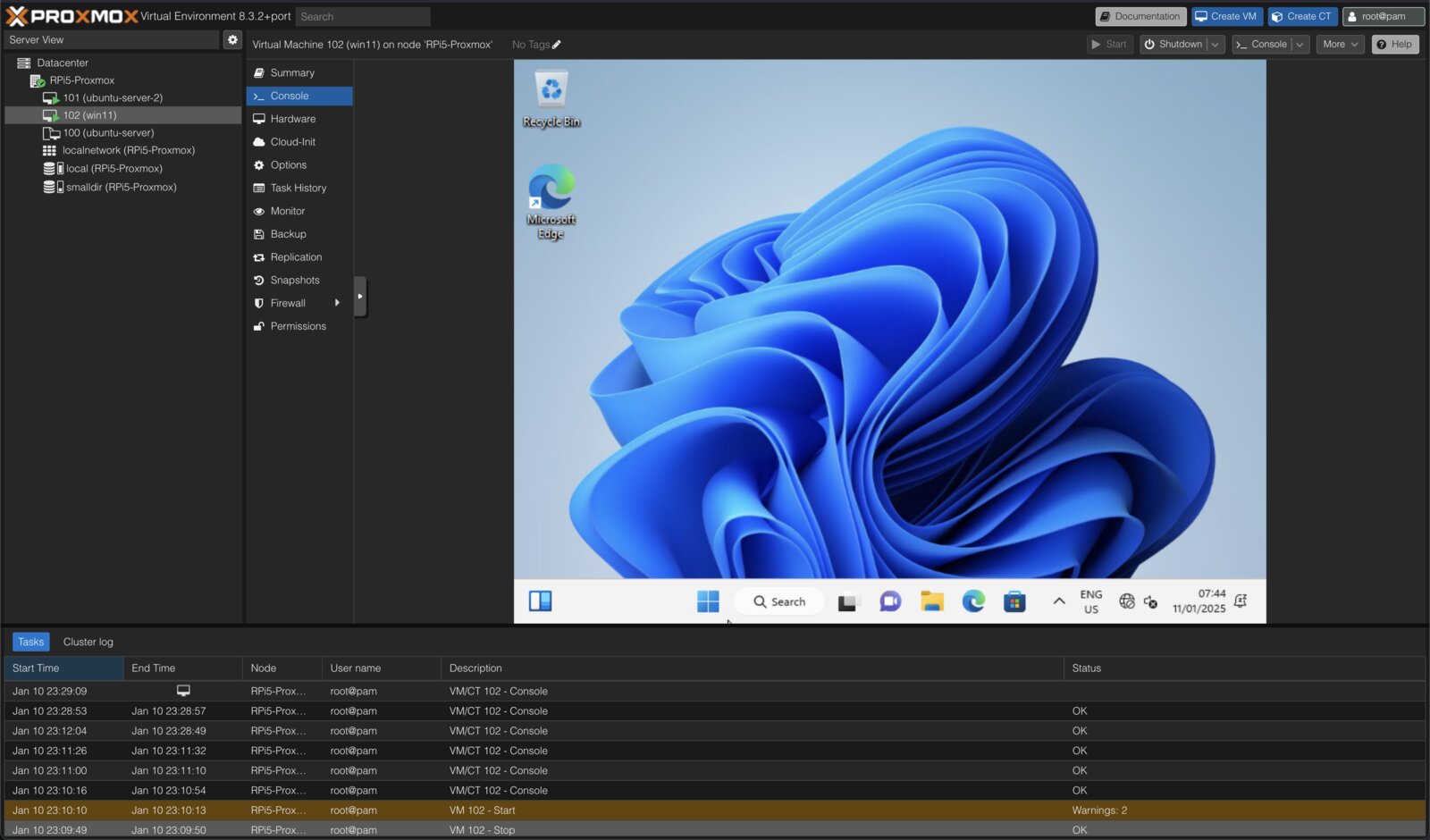

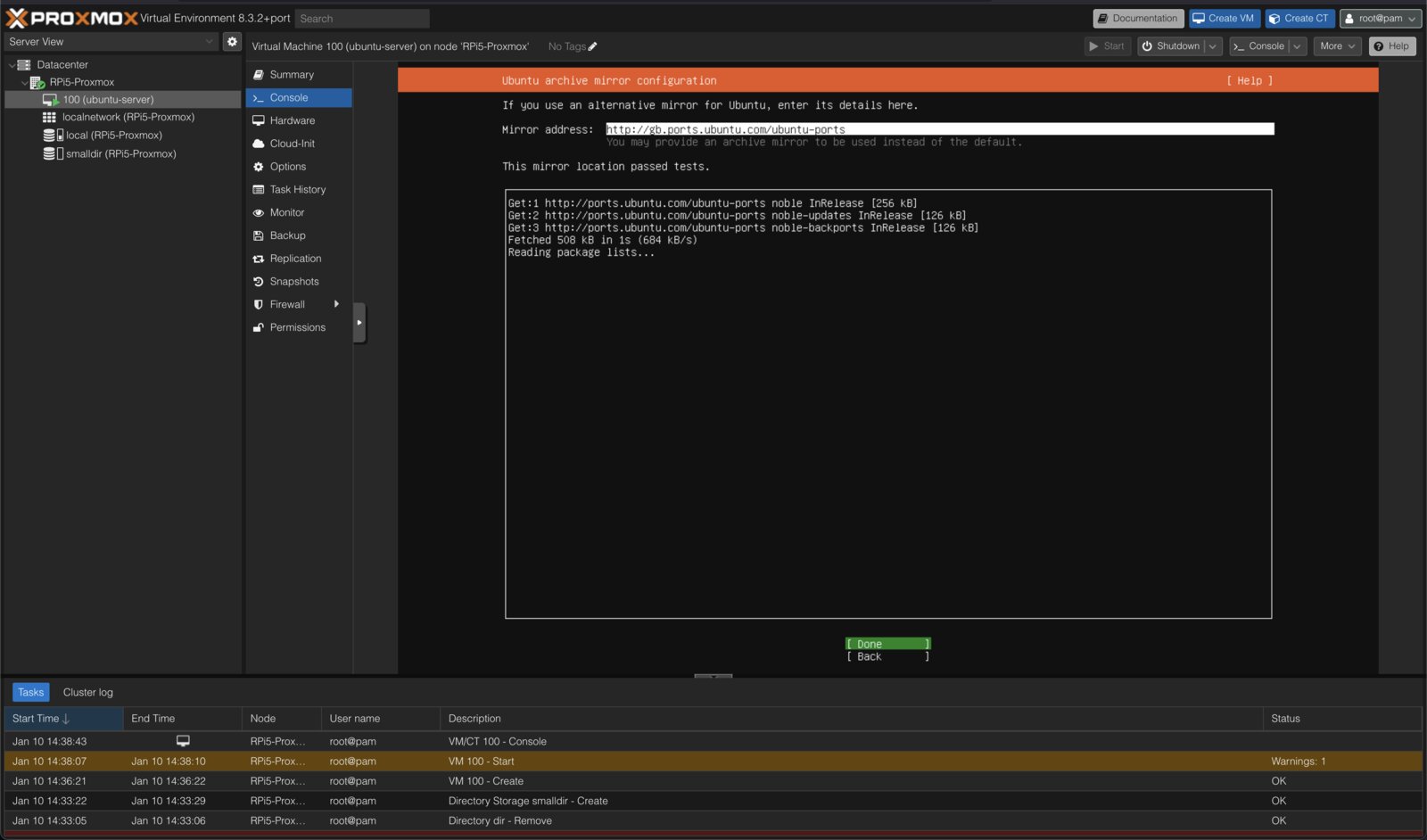

as long as you stick to OVMF UEFI and the virt machine type though, all is well. full-fat Linux VMs (Ubuntu Server 24.04 in my testing) can be created, templated, snapshotted and backed up in the usual way. ballooning memory works, as do USB passthrough and web VNC. CPU usage on the Pi is totally reasonable and many concurrent VMs run well. I was pleased at this stage, and moved on to Windows guests.

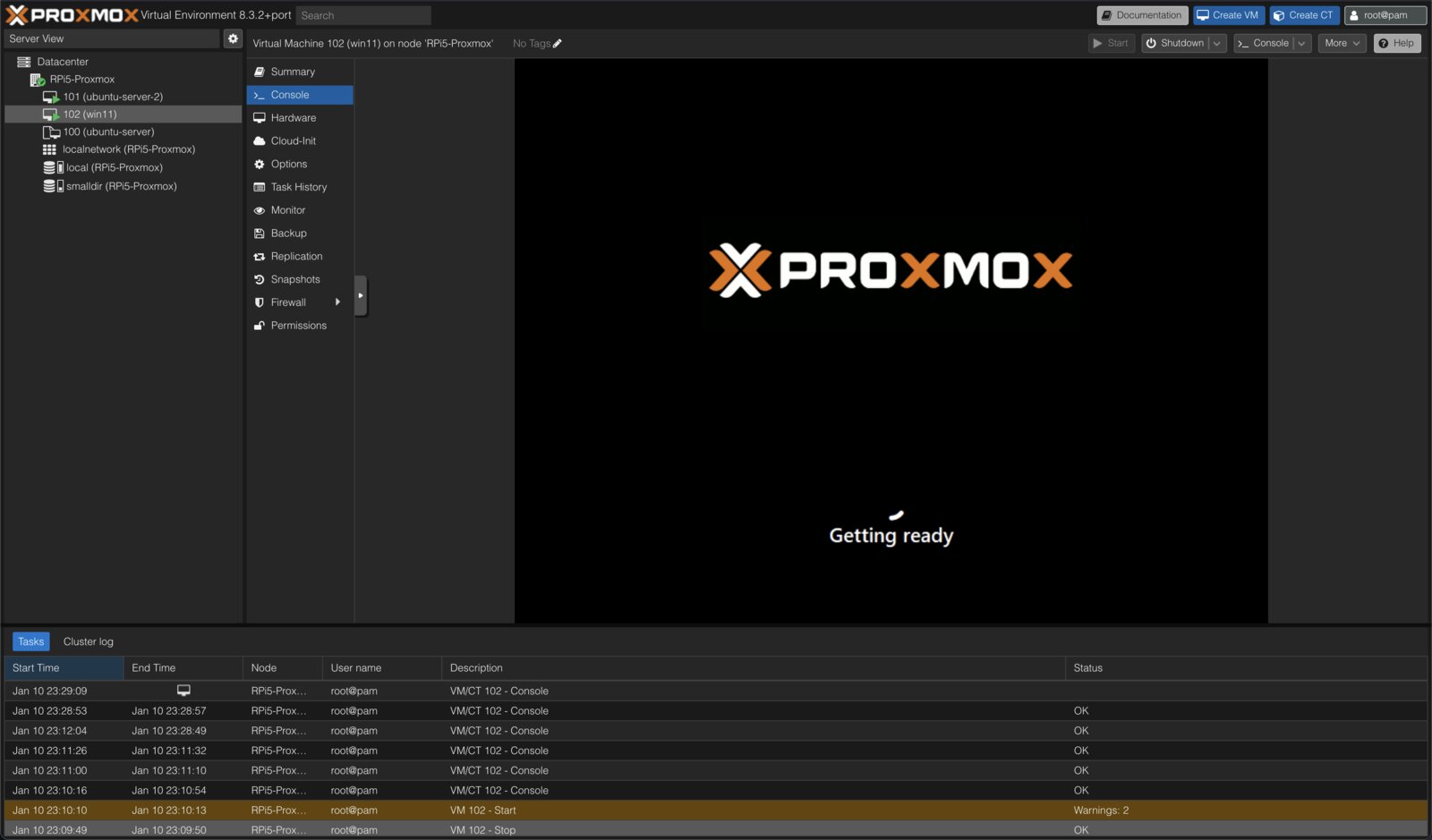

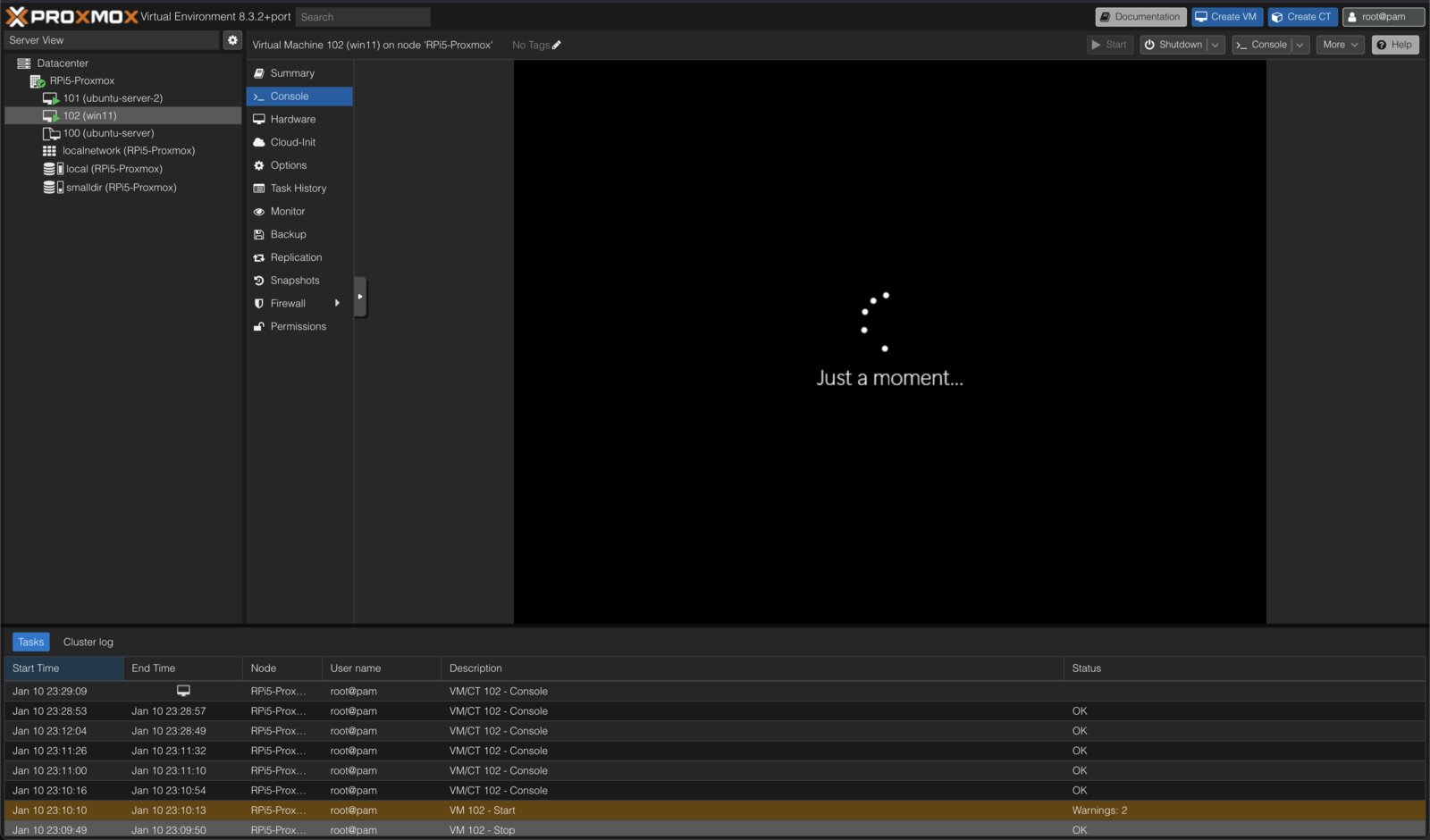

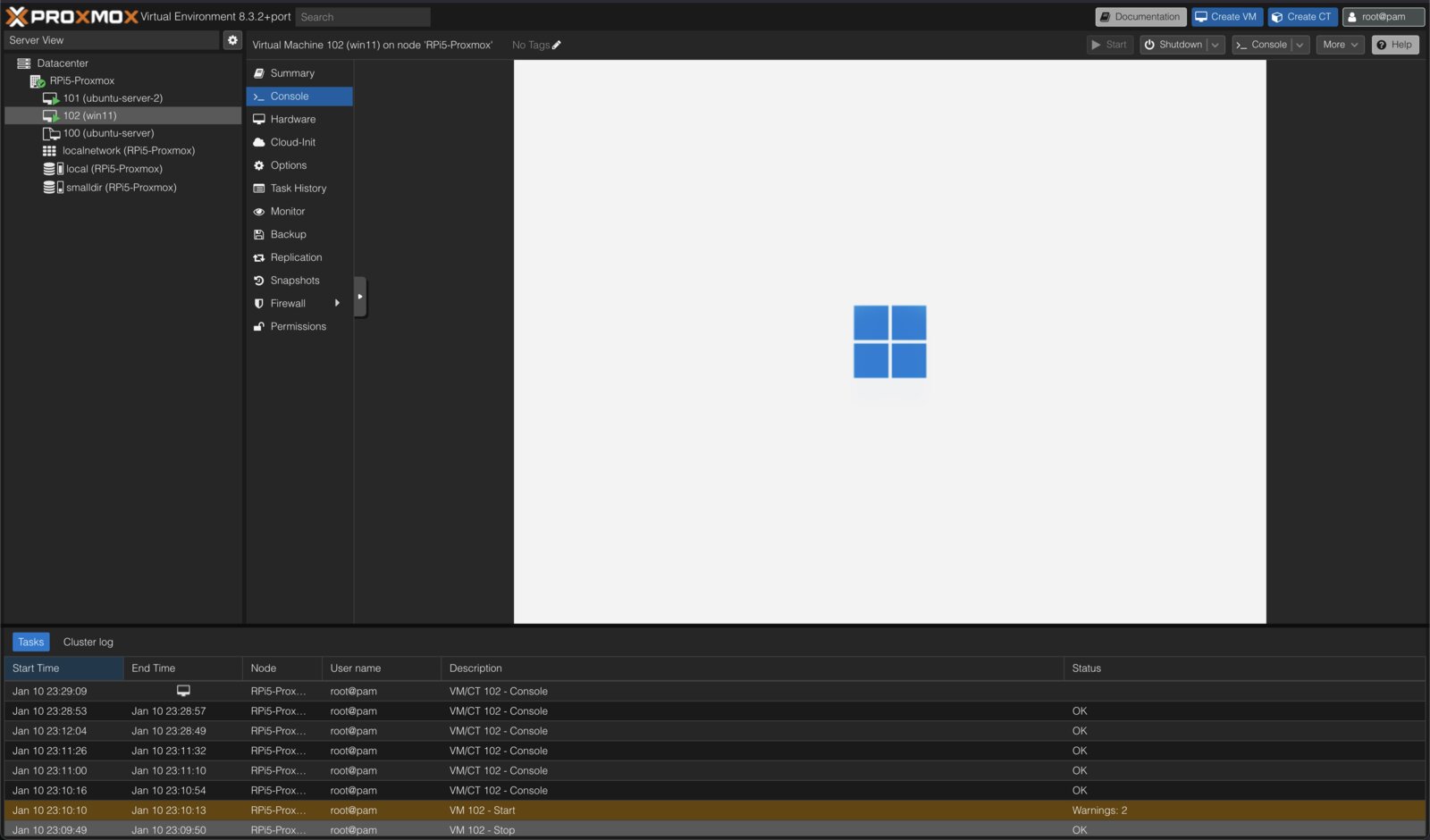

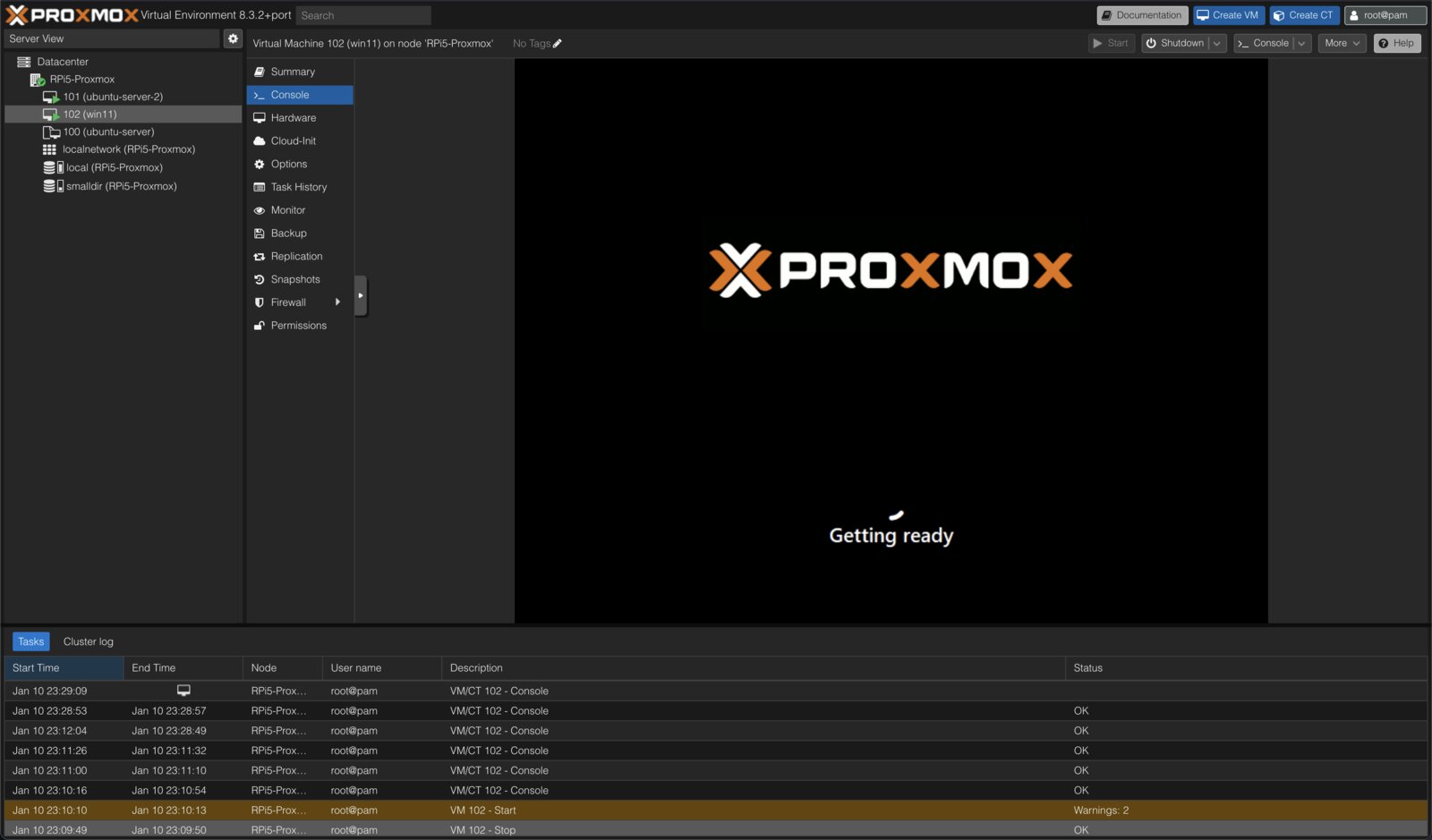

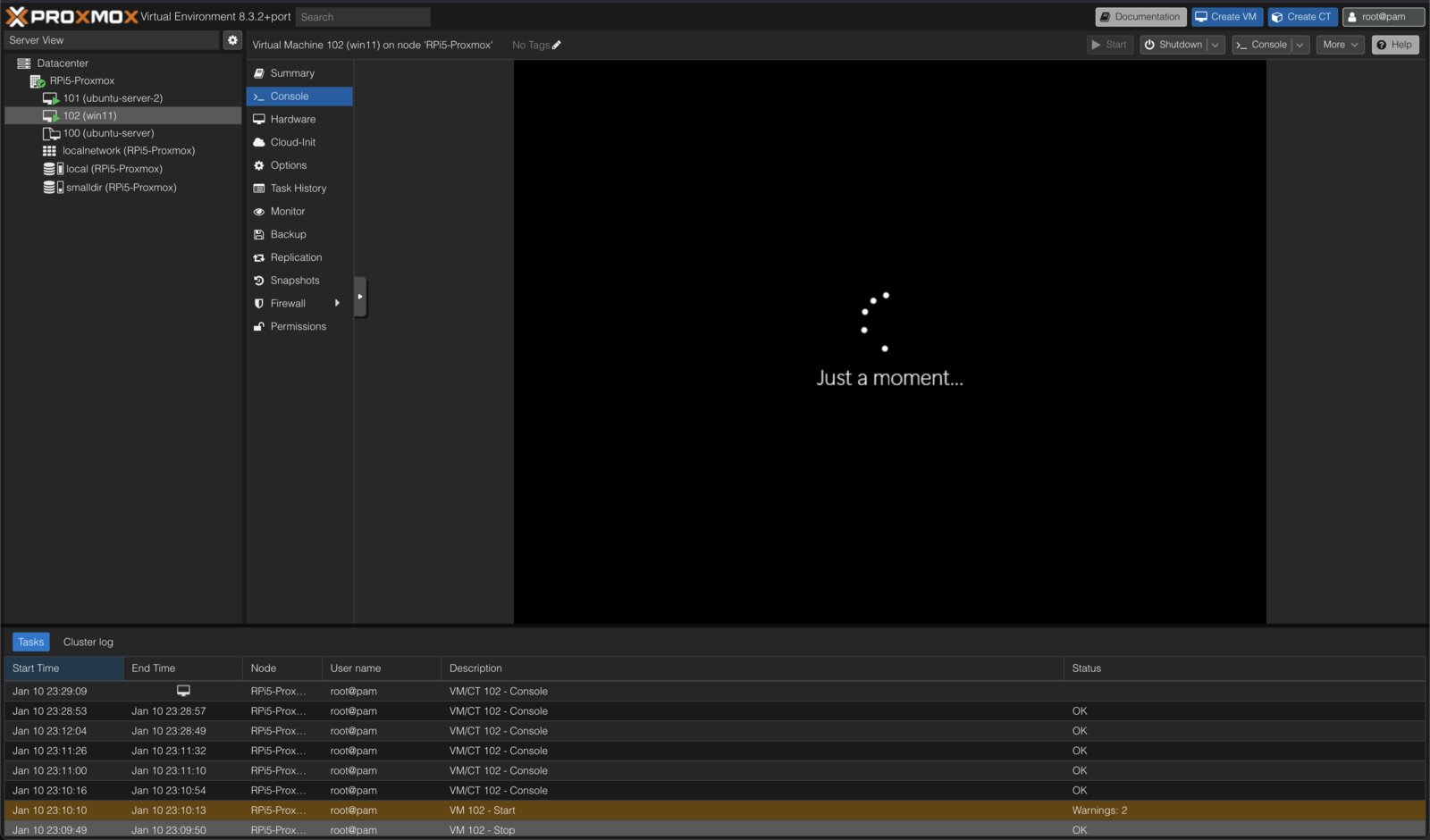

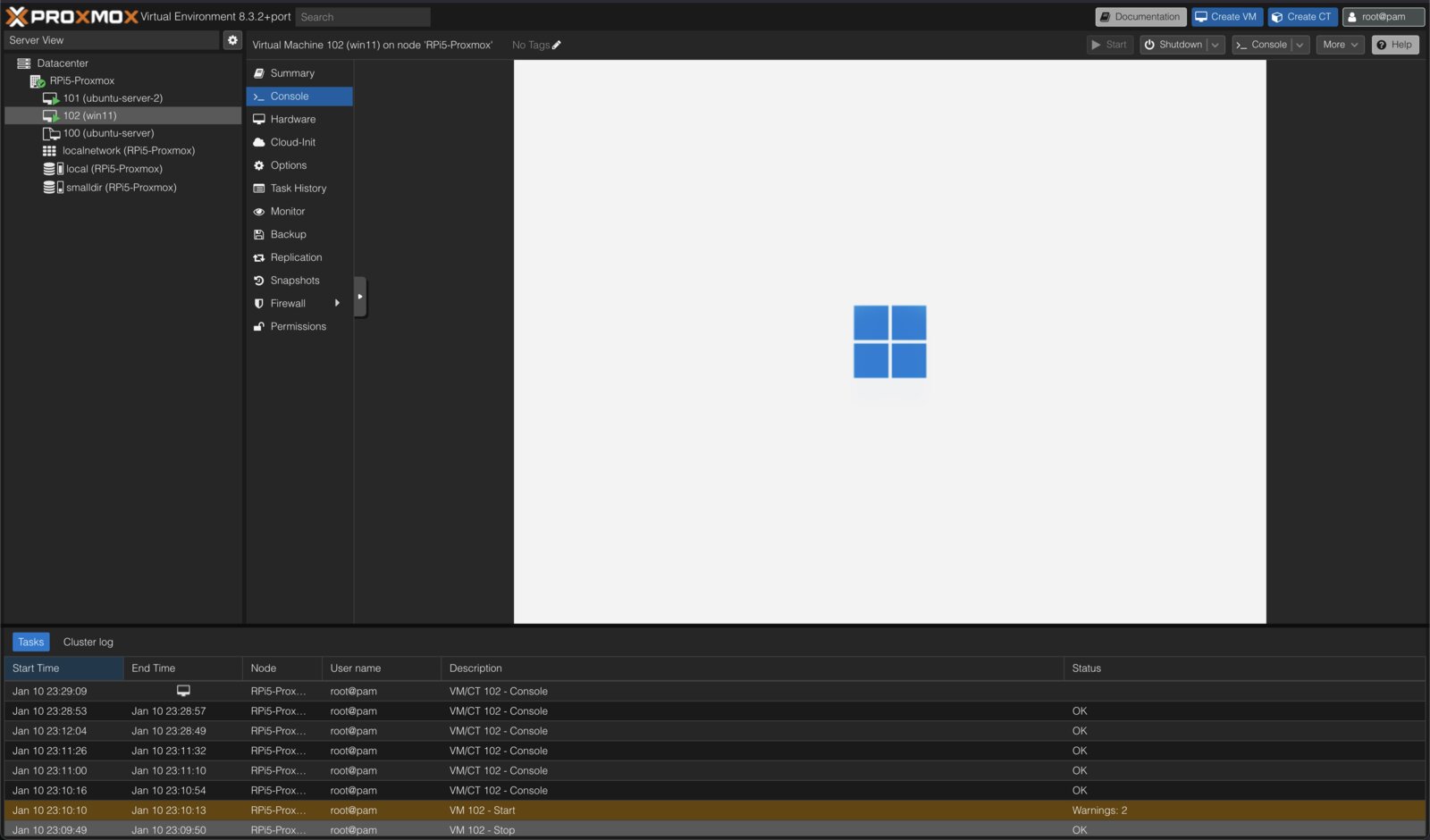

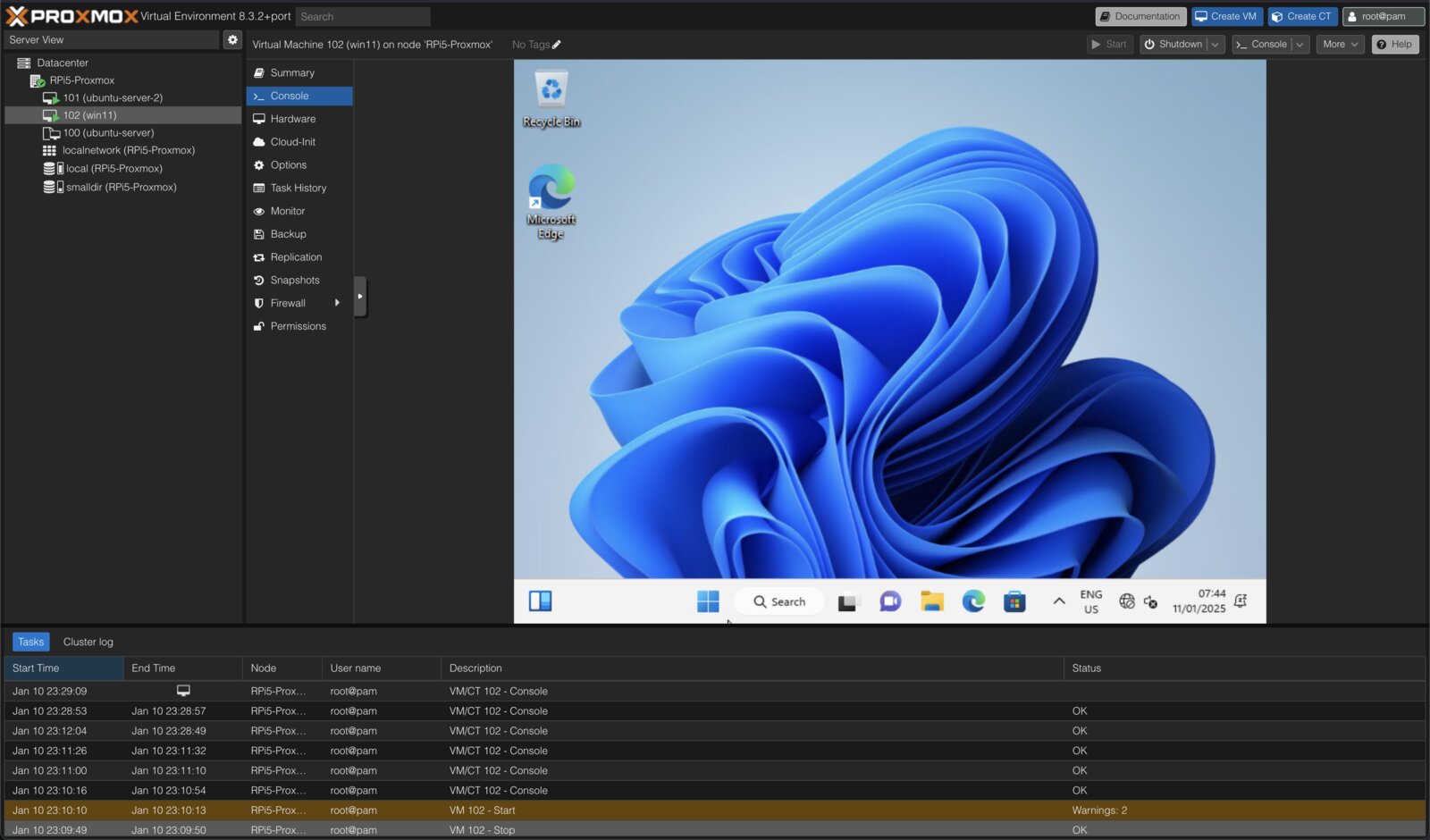

this is rather less idiot-proof, but after an evening of messing about, Windows 11 on ARM is running in a Qemu/KVM virtual machine, launched and managed entirely through the Proxmox web console. here is how it is done, a few of the sticking points, and some strategies for debugging.

after creating the VM with an attached Windows installer .iso in the web console and what seem like sensible hardware settings, it didn't start up. depending on which hardware options were chosen the error behaviour was a little different, but never particularly enlightening. after VM creation there is a lot of extra hardware customisation possible in the web console, but which combination of these options is needed (and applied in what order!!) is not obvious without some extra information. rather than list the options I found to be working, I'll describe my method for arriving at them.

when you configure a VM in the web console, your chosen hardware configuration is saved as /etc/pve/qemu-server/VMID.conf (vm.conf from here on). when you hit "start", this file is translated into a corresponding kvm command with all your specified options, plus a load of extras/defaults. it is not immediately clear which options/flags are being set in your vm.conf when you make changes in the web console, or which command line flags they might be translated to at runtime.
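
to poke at one of these files outside the web console, a minimal sketch of a parser follows. it assumes the layout seen in my own config: one key: value pair per line, with any snapshot sections introduced by a [name] header after the current settings. the function name and the sample text are mine, not anything PVE ships.

```python
def parse_vm_conf(text: str) -> dict[str, str]:
    """Parse the current-state part of a PVE VM config into a dict."""
    conf: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        if line.startswith("["):
            break  # assumption: snapshot sections start here, so stop
        # split on the first colon only, values such as MAC addresses contain more
        key, sep, value = line.partition(":")
        if sep:
            conf[key.strip()] = value.strip()
    return conf


# sample lines taken from the config shown later in this post
SAMPLE = """\
bios: ovmf
machine: virt-9.2
ostype: l26
args: -d guest_errors -D /tmp/102.log
net0: virtio=BC:24:11:1D:BC:07,bridge=vmbr0,firewall=1
"""

conf = parse_vm_conf(SAMPLE)
print(conf["machine"], conf["bios"])
```

on a real host you would pass in the contents of the VM's file under /etc/pve/qemu-server/ instead of SAMPLE.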

ignoring the web console for a minute then, qm showcmd VMID --pretty will perform a dry-run of the process I just described: parsing the vm.conf into a kvm command and outputting it for inspection. here, you can look out for things which are obviously x86-specific (any Hyper-V options! HPET!) and anything else which looks problematic or lines up with the error messages you get when it runs. modify the vm.conf (either by hand or by changing things in the web console) and run showcmd again to see how the different variables affect the result. you can also run these kvm commands manually and log any errors.

I noted that the args: line of the vm.conf takes literal flags to be passed into the kvm command, while options like machine: and ostype: take only PVE-specific strings and add more than just a single flag to the generated command, sometimes changing it dramatically (in the case of machine type for instance). it's usually relatively obvious which web console options affect which parts of the vm.conf too. this was a useful discovery.
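
the change-one-thing-and-compare loop is easy to script. the sketch below is an illustration rather than the exact tooling I used: showcmd() just wraps the qm command above, and changed_lines() (a name I made up) keeps only the lines that differ between two outputs. the before/after strings are stand-ins so the example runs without a PVE host.

```python
import difflib
import subprocess


def showcmd(vmid: int) -> str:
    """Return the kvm command PVE would generate for a VM (run on the PVE host)."""
    result = subprocess.run(
        ["qm", "showcmd", str(vmid), "--pretty"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def changed_lines(before: str, after: str) -> list[str]:
    """Keep only the lines that differ between two showcmd outputs."""
    diff = difflib.ndiff(before.splitlines(), after.splitlines())
    return [line for line in diff if line.startswith(("- ", "+ "))]


# stand-in strings; on a real host: before = showcmd(102), edit the config,
# then after = showcmd(102)
before = "-cpu host \\\n-m 2048 \\\n-device ramfb"
after = "-cpu host \\\n-m 4096 \\\n-device ramfb"
for line in changed_lines(before, after):
    print(line)
```

here the only difference is the -m flag, which is what you would expect after changing memory: in the config. a change to machine: or ostype: tends to produce a much longer list.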

the vm.conf I ended up with:

    args: -d guest_errors -D /tmp/102.log
    bios: ovmf
    boot: order=scsi0;scsi2
    cores: 2
    kvm: 1
    localtime: 0
    machine: virt-9.2
    memory: 2048
    meta: creation-qemu=9.2.0,ctime=1736543088
    name: win11
    net0: virtio=BC:24:11:1D:BC:07,bridge=vmbr0,firewall=1
    numa: 0
    ostype: l26
    parent: working
    sata0: local:iso/virtio-win-0.1.266.iso,media=cdrom,size=707456K
    scsi0: smalldir:102/vm-102-disk-0.qcow2,iothread=1,size=32G
    scsi2: smalldir:iso/w11.iso,media=cdrom,size=4375488K
    scsihw: virtio-scsi-pci
    smbios1: uuid=23d73179-c00e-4046-905d-bfe504b5c336
    sockets: 1
    vga: ramfb
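
the two settings that matter most in a config like the one above are the machine type and the firmware. a small check along these lines (a sketch; the function name and messages are invented, and the rules are only the "virt plus OVMF" advice from earlier) can catch a stray q35 before you hit start:

```python
def aarch64_warnings(conf: dict[str, str]) -> list[str]:
    """Flag settings that do not follow the 'virt machine + OVMF' advice."""
    warnings = []
    machine = conf.get("machine", "")
    if not machine.startswith("virt"):
        warnings.append(f"machine is {machine or 'unset'!r}, expected a virt type")
    bios = conf.get("bios", "")
    if bios != "ovmf":
        warnings.append(f"bios is {bios or 'unset'!r}, expected ovmf")
    return warnings


# values from the working config, then a deliberately wrong one
print(aarch64_warnings({"machine": "virt-9.2", "bios": "ovmf"}))
print(aarch64_warnings({"machine": "q35"}))
```

the first call reports nothing, the second complains about both the machine type and the missing bios line.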

the kvm command this generates:

    /usr/bin/kvm \
    -id 102 \
    -name 'win11,debug-threads=on' \
    -no-shutdown \
    -chardev 'socket,id=qmp,path=/var/run/qemu-server/102.qmp,server=on,wait=off' \
    -mon 'chardev=qmp,mode=control' \
    -chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' \
    -mon 'chardev=qmp-event,mode=control' \
    -pidfile /var/run/qemu-server/102.pid \
    -daemonize \
    -smbios 'type=1,uuid=23d73179-c00e-4046-905d-bfe504b5c336' \
    -drive 'if=pflash,unit=0,format=raw,readonly=on,file=/usr/share/pve-edk2-firmware//AAVMF_CODE.fd' \
    -drive 'if=pflash,unit=1,id=drive-efidisk0,format=raw,file=/tmp/102-ovmf.fd,size=67108864' \
    -smp '2,sockets=1,cores=2,maxcpus=2' \
    -nodefaults \
    -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' \
    -vnc 'unix:/var/run/qemu-server/102.vnc,password=on' \
    -cpu host \
    -m 2048 \
    -readconfig /usr/share/qemu-server/pve-port.cfg \
    -device 'qemu-xhci,id=ehci,bus=pcie.0,addr=0x1' \
    -device 'qemu-xhci,p2=15,p3=15,id=xhci,bus=pcie.1,addr=0x1' \
    -device 'usb-tablet,id=tablet,bus=ehci.0,port=1' \
    -device 'usb-kbd,id=keyboard,bus=ehci.0,port=2' \
    -device ramfb \
    -device 'virtio-balloon-pci,id=balloon0,bus=pcie.0,addr=0x3,free-page-reporting=on' \
    -device 'virtio-scsi-pci,id=scsihw0,bus=pcie.0,addr=0x5' \
    -drive 'file=/mnt/pve/smalldir/images/102/vm-102-disk-0.qcow2,if=none,id=drive-scsi0,format=qcow2,cache=none,aio=io_uring,detect-zeroes=on' \
    -device 'scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,bootindex=100' \
    -drive 'file=/mnt/pve/smalldir/template/iso/win11.iso,if=none,id=drive-scsi2,media=cdrom,format=raw,aio=io_uring' \
    -device 'scsi-cd,bus=scsihw0.0,channel=0,scsi-id=0,lun=2,drive=drive-scsi2,id=scsi2,bootindex=101' \
    -device 'ahci,id=ahci0,multifunction=on,bus=pcie.0,addr=0x7' \
    -drive 'file=/var/lib/vz/template/iso/virtio-win-0.1.266.iso,if=none,id=drive-sata0,media=cdrom,format=raw,aio=io_uring' \
    -device 'ide-cd,bus=ahci0.0,drive=drive-sata0,id=sata0' \
    -netdev 'type=tap,id=net0,ifname=tap102i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' \
    -device 'virtio-net-pci,mac=BC:24:11:1D:BC:07,netdev=net0,bus=pcie.0,addr=0xb,id=net0,rx_queue_size=1024,tx_queue_size=256' \
    -machine 'type=virt-9.2+pve0,gic-version=host' \
    -d guest_errors \
    -D /tmp/102.log
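
a command this long is tedious to eyeball, so here is a rough sketch of scanning one for the x86-isms mentioned earlier. the marker list is purely illustrative (it covers only the Hyper-V, HPET and q35 cases named in this post, not everything PVE might emit), the function names are mine, and the BAD example line is made up to show a hit.

```python
import shlex

# illustrative, not exhaustive: substrings that hint at x86-only options
X86_MARKERS = ("hv_", "hpet", "q35")


def split_kvm_command(pretty: str) -> list[str]:
    """Turn the multi-line 'pretty' command into a flat argument list."""
    # join the backslash-newline continuations first, then split shell-style
    return shlex.split(pretty.replace("\\\n", " "))


def suspicious_args(argv: list[str]) -> list[str]:
    """Return the arguments that contain any of the x86 markers."""
    return [arg for arg in argv if any(m in arg.lower() for m in X86_MARKERS)]


GOOD = r"""/usr/bin/kvm \
-id 102 \
-machine 'type=virt-9.2+pve0,gic-version=host' \
-cpu host"""

# hypothetical bad line, not taken from real PVE output
BAD = r"""/usr/bin/kvm \
-cpu 'host,hv_relaxed'"""

print(suspicious_args(split_kvm_command(GOOD)))
print(suspicious_args(split_kvm_command(BAD)))
```

anything this turns up is a hint about which vm.conf option to go and change, not proof that the flag is the cause of the failure.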

with minimal distributions like Alpine that run in only 256MB of memory, and the kernel samepage merging (KSM) that Proxmox makes use of, the Pi 5 can run dozens of lightweight VMs. for automated/sandboxed version testing of aarch64 software components this could be really useful, and orders of magnitude cheaper than other options. I am going to keep this Pi around as a VM host, and try to resist the urge to add more to a cluster. I will now always default to ARM-based cloud hosting where possible, so having a system like this for testing things is very handy. long live aarch64.